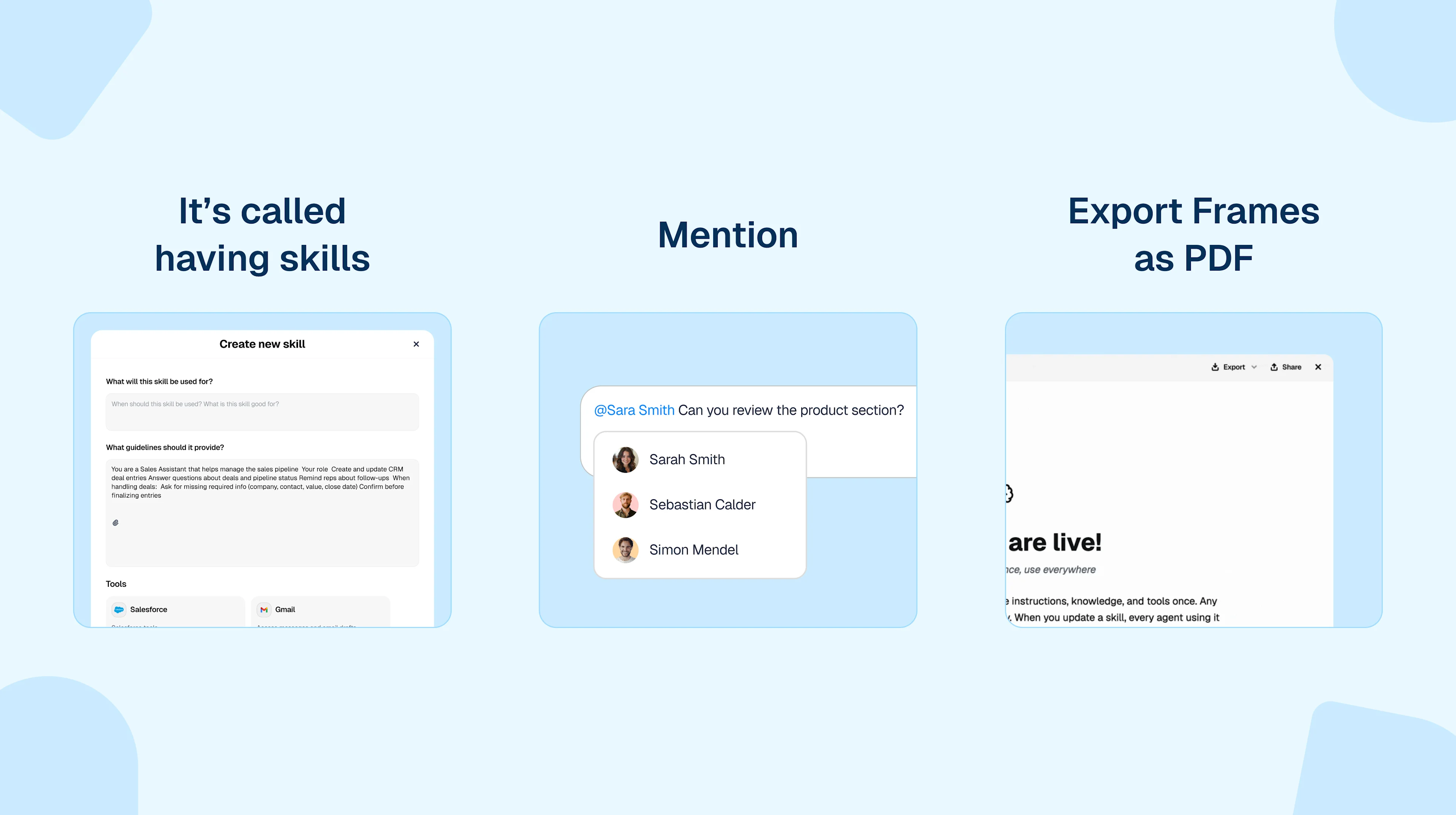

Why Dust incorporated voice mode in its Agent Management Platform

When we started seeing customers ask about voice capabilities—first a few scattered requests, then a steady pattern—we knew we needed to move beyond keyboard-only interactions. Not because voice is trendy, but because people work differently when they can just talk.

The problem was real: someone walking between meetings wants to capture a thought without stopping to type. A team in Tokyo wants to collaborate with colleagues in Paris without everyone switching to English. A product manager wants to turn research into a shareable podcast without spending hours editing audio.

We needed voice that worked at the speed of thought, in the languages people actually speak, with the quality that makes AI feel like a teammate rather than a tool.

What Voice Needs to Do in a Work Context

Voice for work is different from voice for consumers. A demo that impresses in a controlled environment can fail in practice when someone's on a noisy train, switching between languages mid-sentence, or needs the output to sound professional enough to share with customers.

We identified what mattered:

Quality that holds up to scrutiny. When someone generates a podcast from research to share with their team, or transcribes notes to send to a client, the output needs to sound natural and professional. Robotic voices and clumsy transcriptions break trust fast.

Multilingual by default. Our customers work globally. We needed a solution that could handle someone speaking French, English, and German in the same conversation—not as an edge case, but as a normal Tuesday.

Speed that doesn't break flow. Voice features that take 10 seconds to respond turn conversations into waiting games. For voice input, transcription needs to happen fast enough that you don't lose your train of thought. For voice generation, you need to be able to iterate quickly.

Enterprise-grade data handling. Companies need to know their sensitive conversations aren't being stored, analyzed, or used to train models. Zero data retention isn't optional—it's a requirement.

Why ElevenLabs

We evaluated several providers: OpenAI's Whisper and GPT models, Google's Speech-to-Text, Deepgram, and AssemblyAI. Each had strengths. But ElevenLabs stood out for how it handled the complete picture:

Voice quality that people notice. In our internal testing, ElevenLabs consistently produced the most natural-sounding speech. The emotional range and expressiveness made generated content feel conversational, not synthetic. When we built our Speech Generator tool—which lets you turn text into single-voice or multi-speaker audio—the quality was good enough that people started using it for external content like podcasts and presentations.

True multilingual support. ElevenLabs' models support voice generation in 70+ languages and transcription in 99 languages, with automatic language detection. More importantly, the quality holds up across languages, and a voice cloned in one language works in all supported languages. This matters when you're a French company working with German clients and American partners.

Enterprise features that work in practice. Zero Data Retention mode means sensitive content isn't stored on their servers. Multi-region support (EU and US) lets us route requests based on workspace location, for both compliance and performance. SOC 2 and GDPR compliance aren't just checkboxes: they enable the procurement conversations that determine whether companies can actually use this.
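
To make per-workspace routing concrete, here is a minimal sketch of how a request URL could be chosen from a workspace's region while always asking for zero retention. The `Workspace` type is hypothetical. The EU base URL and the `enable_logging=false` query parameter (ElevenLabs' documented switch for zero-retention mode on some endpoints) are assumptions to check against the current ElevenLabs API reference. This is an illustration, not Dust's code.

```typescript
// Hypothetical workspace shape: Dust's real data model is not described in this post.
type Region = "us" | "eu";

interface Workspace {
  id: string;
  region: Region;
}

// ASSUMPTION: one base URL per region. Verify the EU data-residency host
// against the current ElevenLabs documentation before relying on it.
const BASE_URLS: Record<Region, string> = {
  us: "https://api.elevenlabs.io",
  eu: "https://api.eu.residency.elevenlabs.io",
};

// Builds the full URL for an ElevenLabs API path, routed by workspace region.
// ASSUMPTION: zero-retention mode is requested with `enable_logging=false`.
// It is added last, so callers cannot override it through `params`.
function elevenLabsUrl(
  workspace: Workspace,
  path: string,
  params: Record<string, string> = {},
): string {
  const query: Record<string, string> = { ...params, enable_logging: "false" };
  const qs = Object.entries(query)
    .map(([k, v]) => `${encodeURIComponent(k)}=${encodeURIComponent(v)}`)
    .join("&");
  return `${BASE_URLS[workspace.region]}${path}?${qs}`;
}

const parisWorkspace: Workspace = { id: "ws_paris", region: "eu" };
console.log(elevenLabsUrl(parisWorkspace, "/v1/speech-to-text"));
// https://api.eu.residency.elevenlabs.io/v1/speech-to-text?enable_logging=false
```

Keeping the region lookup and the retention flag in one helper means no individual feature (transcription, speech, sound effects) has to remember either rule.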

Production-ready APIs. Their TypeScript SDKs integrated cleanly. The API is straightforward—you don't fight the interface to get it working. Error handling is clear, rate limits are documented, and the system is stable enough that we're comfortable offering it to all workspaces.

How It Works: Two Sides of Voice

We integrated ElevenLabs into Dust in two complementary ways:

Voice Input: Speaking to Agents

When you hit the microphone button in a Dust conversation, your audio is transcribed with ElevenLabs' scribe_v1 model. The model automatically detects the language you're speaking, and the resulting text is passed to the agent you're talking to.
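
The transcription step can be sketched as follows. The `/v1/speech-to-text` path, the `model_id` form field, and the `text`, `language_code` and `language_probability` response fields reflect ElevenLabs' public speech-to-text API as we understand it. The `AgentInput` shape, the confidence threshold, and the sample values are hypothetical.

```typescript
// Form fields sent as multipart/form-data (alongside the audio `file`) to
// POST /v1/speech-to-text. No language is specified, so the model detects it.
const TRANSCRIPTION_FIELDS = { model_id: "scribe_v1" } as const;

// Subset of the JSON response this sketch relies on (field names assumed).
interface ScribeResponse {
  text: string;
  language_code: string;
  language_probability: number;
}

// Hypothetical shape handed to the agent layer.
interface AgentInput {
  text: string;
  detectedLanguage: string | null;
}

// Hypothetical policy: below this confidence, don't trust the detected language.
const MIN_LANGUAGE_CONFIDENCE = 0.5;

function toAgentInput(res: ScribeResponse): AgentInput {
  return {
    text: res.text.trim(),
    detectedLanguage:
      res.language_probability >= MIN_LANGUAGE_CONFIDENCE ? res.language_code : null,
  };
}

// Illustrative response values, not real API output.
const input = toAgentInput({
  text: " Résume les notes de la réunion d'hier. ",
  language_code: "fr",
  language_probability: 0.97,
});
console.log(TRANSCRIPTION_FIELDS.model_id, input.detectedLanguage); // scribe_v1 fr
```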

The interesting part is intent detection. Because people often speak more naturally than they type, voice input can be ambiguous about which agent you want. We built a layer that analyzes the transcription to figure out if you're mentioning an agent by name, even when the phrasing is casual. "Hey, could you ask the research agent to..." gets routed correctly without you having to invoke a specific command.
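
As a rough illustration of the routing problem, the sketch below finds an agent mentioned in a transcript using two plain string rules: an explicit `@name`, or the spoken name followed by the word "agent". The post doesn't say how Dust's detection layer actually works (it may well use a model), so treat the `Agent` type and both rules as hypothetical stand-ins.

```typescript
// Hypothetical agent record; `name` is the handle users type after "@".
interface Agent {
  id: string;
  name: string;
}

// Lowercase, turn "@", "-" and "_" into spaces, replace any other
// non-alphanumeric ASCII character with a space, and collapse whitespace.
function normalizeSpeech(s: string): string {
  return s
    .toLowerCase()
    .replace(/[@\-_]/g, " ")
    .replace(/[^a-z0-9\s]/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Returns the agent the speaker most plausibly addressed, or null.
function detectMentionedAgent(transcript: string, agents: Agent[]): Agent | null {
  const spoken = ` ${normalizeSpeech(transcript)} `;
  // Try longer names first so a long handle wins over a shorter overlapping one.
  const byLength = agents.slice().sort((a, b) => b.name.length - a.name.length);
  for (const agent of byLength) {
    // Rule 1: an explicit "@name" that isn't the prefix of a longer handle.
    const explicit = new RegExp(`@${escapeRegExp(agent.name)}(?![\\w-])`, "i");
    if (explicit.test(transcript)) return agent;
    // Rule 2: casual phrasing such as "ask the research agent to ...".
    if (spoken.includes(` ${normalizeSpeech(agent.name)} agent `)) return agent;
  }
  return null;
}
```

Requiring the word "agent" after a bare name keeps "do some research on pricing" from being routed to an agent called `research`.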

This works on mobile, which turns out to be critical. The times you most want voice input—walking between meetings, commuting, or away from your desk—are exactly the times when typing is hardest.

Voice Output: Agents That Create Audio

Through our Speech Generator and Sound Studio tools, agents can create audio content. This isn't about agents talking back to you (we explored that; it's not ready yet). It's about generating audio artifacts you can use.

Speech Generator uses ElevenLabs' eleven_multilingual_v2 model for single-voice content and eleven_v3 for multi-speaker dialogues. You can specify parameters like language, use case (narrative, conversational, educational), and gender. We curated 12 voices that cover different tones and accents, with intelligent fallback logic when your exact combination isn't available.
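
One way to implement fallback over a curated catalog is to relax the requested attributes one at a time until a voice matches. The catalog, placeholder IDs, and relaxation order below are hypothetical: the post doesn't publish Dust's 12 voices or its ordering.

```typescript
type UseCase = "narrative" | "conversational" | "educational";
type Gender = "female" | "male";

interface Voice {
  id: string; // placeholder IDs, not real ElevenLabs voice IDs
  language: string;
  useCase: UseCase;
  gender: Gender;
}

// Every field is optional: users may only care about some of them.
interface VoiceRequest {
  language?: string;
  useCase?: UseCase;
  gender?: Gender;
}

// Hypothetical catalog standing in for the curated 12; the first entry is the global default.
const CATALOG: Voice[] = [
  { id: "voice_en_narrative_f", language: "en", useCase: "narrative", gender: "female" },
  { id: "voice_en_conversational_m", language: "en", useCase: "conversational", gender: "male" },
  { id: "voice_fr_conversational_f", language: "fr", useCase: "conversational", gender: "female" },
  { id: "voice_de_educational_m", language: "de", useCase: "educational", gender: "male" },
];

// Constraints kept at each step; the least important one is dropped first.
const RELAXATION_STEPS: (keyof VoiceRequest)[][] = [
  ["language", "useCase", "gender"],
  ["language", "useCase"],
  ["language"],
  [],
];

function selectVoice(req: VoiceRequest, catalog: Voice[] = CATALOG): Voice {
  for (const keys of RELAXATION_STEPS) {
    // An unspecified attribute matches any voice.
    const match = catalog.find((v) =>
      keys.every((k) => req[k] === undefined || v[k] === req[k]),
    );
    if (match) return match;
  }
  throw new Error("voice catalog is empty");
}
```

Language is kept longest because an accent mismatch is more noticeable than a different use case. The final step can return any voice because multilingual models can speak every supported language with any voice.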

The practical use case: pair Speech Generator with our @deep-dive agent to turn comprehensive research into a podcast. The agent does deep research, structures the findings as a narrative, and generates audio you can listen to during your commute or share with your team.
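
To feed an agent-written script to a multi-speaker model, the script has to be split into per-speaker turns. The sketch below assumes a hypothetical `SPEAKER: text` script format and a speaker-to-voice map. The `{ text, voice_id }` turn shape mirrors the inputs of ElevenLabs' text-to-dialogue endpoint as we understand it, which is an assumption to verify, and none of this is Dust's actual pipeline.

```typescript
// One line of dialogue paired with the voice that should read it.
interface DialogueTurn {
  text: string;
  voice_id: string;
}

// Hypothetical script format: each turn starts with "SPEAKER: text".
// Lines without a known speaker label are appended to the previous turn.
function scriptToTurns(script: string, voices: Record<string, string>): DialogueTurn[] {
  const turns: DialogueTurn[] = [];
  for (const raw of script.split("\n")) {
    const line = raw.trim();
    if (line === "") continue;
    const m = /^([A-Z][A-Z ]*):\s*(.+)$/.exec(line);
    const voiceId = m ? voices[m[1]] : undefined;
    if (m && voiceId !== undefined) {
      turns.push({ text: m[2], voice_id: voiceId });
    } else if (turns.length > 0) {
      turns[turns.length - 1].text += " " + line;
    } else {
      throw new Error(`script must start with a speaker label: "${line}"`);
    }
  }
  return turns;
}
```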

Sound Studio generates sound effects using ElevenLabs' text-to-sound-effects API. It's niche, but useful for creators, trainers, and anyone building content that needs audio elements.
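
A sound effect request is essentially a text prompt plus optional settings. In the sketch below, the `/v1/sound-generation` path and the `text`, `duration_seconds` and `prompt_influence` fields reflect ElevenLabs' public sound effects API as we understand it; check them against the current reference. The validation rules are our own illustrative choices.

```typescript
// JSON body for POST /v1/sound-generation (field names assumed from the public API).
interface SoundEffectBody {
  text: string;
  duration_seconds?: number;
  prompt_influence?: number;
}

// Builds a request body, omitting optional fields so the API applies its own defaults.
function soundEffectBody(prompt: string, durationSeconds?: number): SoundEffectBody {
  const text = prompt.trim();
  if (text === "") throw new Error("sound effect prompt must not be empty");
  const body: SoundEffectBody = { text };
  if (durationSeconds !== undefined) {
    if (!(durationSeconds > 0)) throw new Error("duration must be positive");
    body.duration_seconds = durationSeconds;
  }
  return body;
}

console.log(JSON.stringify(soundEffectBody("Soft applause in a small conference room", 3)));
// {"text":"Soft applause in a small conference room","duration_seconds":3}
```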

All audio outputs use a consistent format, so you don't have to think about compatibility.

What We Learned Building This

Regional routing matters more than we expected. When we added EU and US region selection, latency improved noticeably for European customers. It also simplified compliance conversations—data processing happens in the right jurisdiction automatically.

Voice selection is surprisingly nuanced. We initially thought more voices would be better, but customers got overwhelmed by choice. We narrowed it to 12 curated voices organized by use case and language, with smart fallbacks. This reduced decision paralysis while covering the real range of needs.

Quality still trumps speed for most use cases. We offer both the faster Flash model and the higher-quality Multilingual models. Customers overwhelmingly choose quality. For asynchronous content generation, an extra second of latency doesn't matter if the result sounds noticeably better.
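
The model choices described in this post can be summarized as a small selection function. The model IDs are ElevenLabs' published names. The `preference` switch and its "quality" default are hypothetical, and the post doesn't say which Flash version is offered, so `eleven_flash_v2_5` is an assumption.

```typescript
// ElevenLabs model IDs; which Flash version Dust uses is an assumption.
type TtsModel = "eleven_multilingual_v2" | "eleven_v3" | "eleven_flash_v2_5";

// Hypothetical user-facing switch. "quality" is the default because
// that is what most customers picked.
type Preference = "quality" | "speed";

function pickTtsModel(speakers: number, preference: Preference = "quality"): TtsModel {
  if (!Number.isInteger(speakers) || speakers < 1) {
    throw new Error("speakers must be a positive integer");
  }
  // Multi-speaker dialogue always goes to eleven_v3.
  if (speakers > 1) return "eleven_v3";
  // Single voice: Multilingual v2 unless the user explicitly asked for speed.
  return preference === "speed" ? "eleven_flash_v2_5" : "eleven_multilingual_v2";
}
```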

What This Enables

The voice features we built aren't about replacing typing—they're about expanding what's possible:

- Mobile-first workflows. Capture ideas while walking, add context to projects from your phone, get answers when you can't pull out a laptop.

- Multilingual teams. Speak in your native language, let the system handle translation and routing to the right agent.

- Content transformation. Turn written research into audio format for different consumption contexts. Generate draft podcast scripts that you can iterate on.

- Accessible interactions. Voice input lowers barriers for people who find typing difficult or who think better by talking through problems.

The broader pattern: AI assistants work better when they're not confined to chat boxes. Voice is one interface—we're exploring others—but it's valuable because it meets people where they already are.

What's Next

We're not done with voice. Some things we're exploring:

Voice-to-voice conversations. Agents that can listen and respond with voice in real time. The technology exists (OpenAI's Realtime API is impressive), but cost and reliability aren't there yet for production use at scale.

Better context from audio. Right now we transcribe then process. There's potential in models that can understand audio directly—catching tone, emphasis, and emotional context that text transcription loses.

Longer-form audio processing. Currently we handle up to an hour of audio input. Some use cases need more—full meeting transcripts, recorded presentations, long-form interviews.
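
Going past a one-hour limit usually means splitting a recording into pieces. The sketch below plans fixed-length segments that overlap slightly, so words spoken at a boundary aren't lost. The 60-minute segment length comes from the limit above. The 5-second overlap is an arbitrary illustrative value, and the post doesn't say Dust will take this approach.

```typescript
interface Segment {
  startSeconds: number;
  endSeconds: number;
}

// Splits a recording of totalSeconds into segments no longer than maxSeconds,
// each starting overlapSeconds before the previous one ended.
function planSegments(totalSeconds: number, maxSeconds = 3600, overlapSeconds = 5): Segment[] {
  if (totalSeconds <= 0) return [];
  // Overlap must be smaller than a segment, or the loop would never advance.
  if (overlapSeconds < 0 || overlapSeconds >= maxSeconds) {
    throw new Error("overlap must be in [0, maxSeconds)");
  }
  const segments: Segment[] = [];
  let start = 0;
  for (;;) {
    const end = Math.min(start + maxSeconds, totalSeconds);
    segments.push({ startSeconds: start, endSeconds: end });
    if (end === totalSeconds) break;
    start = end - overlapSeconds;
  }
  return segments;
}

// A 2.5-hour recording (9000 s) becomes three segments:
// 0-3600, 3595-7195 and 7190-9000 seconds.
console.log(planSegments(9000));
```

Stitching the transcripts back together would also require de-duplicating the words in each overlap, which this sketch leaves out.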

The goal isn't to make Dust a voice platform. It's to make voice one of many ways people can work with AI agents, with the same level of quality and integration they expect from every other part of the system.

Voice features in Dust are available now to all workspaces. Hit the microphone button to try voice input, or ask @deep-dive to generate a podcast from research.